In this post, I am going to show you how to queue up multiple instances of a shell script so that only one instance is active at a time. The other instances will queue up and run as soon as an active script finishes. I will also show you how to make sure that the scripts do not lock up for a very long time.

Background

Recently I came across a requirement in a project where a shell script had to run many times on a server. This script needed to use some local resource that could only be accessed by a single job at a time. I had to make sure that the multiple copies of the shell script were not active at the same time.

My traditional locking method for a bash script was to check whether another instance of the same script was already running and, if so, exit. This approach was not going to work here, as I wanted to queue up the jobs rather than terminate them.

Procedure

Instead of the check-for-a-process-and-exit approach, I used ‘flock’ to queue up my jobs. This allows multiple copies of the same script to wait for up to 5 minutes to acquire a lock.

My sample shell script is called myQueue.sh and the code is listed below.

#!/bin/bash

mySelf=$(basename "$0")

lock="/var/lock/${mySelf}"

exec {fd}>"$lock"

flock --timeout 300 "$fd" || exit 1

echo "$$ ... Starting script..." | logger -t "$mySelf"

echo "$$ ... ... running" | logger -t "$mySelf"

sleep 10

echo "$$ ... ... sleeping" | logger -t "$mySelf"

echo "$$ ... Ending script..." | logger -t "$mySelf"

exit 0

Let us take a closer look at the code and how it works.

mySelf=$(basename "$0")

lock="/var/lock/${mySelf}"

I store the name of the current script in a variable and use it to name a lock file under /var/lock.

exec {fd}>"$lock"

flock --timeout 300 "$fd" || exit 1

I use exec to open the lock file I defined earlier. The {fd} syntax makes bash pick an unused file descriptor for the file and store its number in the variable fd. The flock statement takes an exclusive lock on the file, and I am willing to wait up to 300 seconds to acquire it. If the lock cannot be acquired in that time, the script exits with status 1.

Without the --timeout option, and with no other options specified, flock waits indefinitely for the lock.
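To watch the timeout fire without waiting five minutes, here is a minimal sketch. It assumes flock from util-linux and bash 4.1 or later. The temporary lock file, the 1-second timeout, and the names holder and result are made up for the illustration and are not part of myQueue.sh.

```shell
#!/bin/bash
# Sketch: a second locker gives up after a short timeout while the lock is held.
lock=$(mktemp)                      # throwaway lock file (assumption; the post uses /var/lock)

# Background holder: take the lock, then become "sleep 5" so the lock stays held.
(
    exec {fd}>"$lock"
    flock "$fd"
    : > "${lock}.ready"             # signal that the lock is now held
    exec sleep 5
) &
holder=$!

# Wait until the holder really owns the lock.
until [ -e "${lock}.ready" ]; do sleep 0.1; done

exec {fd}>"$lock"                   # bash stores the new descriptor number in $fd
if flock --timeout 1 "$fd"; then
    result="acquired"
else
    result="timed out"              # the holder keeps the lock longer than 1 second
fi
echo "fd=$fd result=$result"

# Clean up the holder and the temporary files.
kill "$holder" 2>/dev/null
wait "$holder" 2>/dev/null
rm -f "$lock" "${lock}.ready"
```

In a real script the `else` branch is where `|| exit 1` would take effect.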

echo "$$ ... Starting script..." | logger -t "$mySelf"

echo "$$ ... ... running" | logger -t "$mySelf"

sleep 10

echo "$$ ... ... sleeping" | logger -t "$mySelf"

echo "$$ ... Ending script..." | logger -t "$mySelf"

exit 0

The rest of the code, up to exit 0, is where you put the statements that need exclusive access to a resource. When the script exits, its file descriptor is closed and the previously acquired lock is released.

Note: If the script dies unexpectedly or the server reboots, the lock is released.
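The release-on-death behaviour can be checked with a small experiment. This is only a sketch under assumptions: it uses a temporary lock file instead of /var/lock, and the names holder, before, and after are invented for the demonstration.

```shell
#!/bin/bash
# Sketch: a lock held by a killed process is released by the kernel.
lock=$(mktemp)                      # throwaway lock file (assumption)

# Holder: take the lock, then exec into sleep so that exactly one process
# (the one killed below) keeps the locked descriptor open.
(
    exec {fd}>"$lock"
    flock "$fd"
    : > "${lock}.ready"
    exec sleep 30
) &
holder=$!
until [ -e "${lock}.ready" ]; do sleep 0.1; done

exec {fd}>"$lock"
if flock -n "$fd"; then before="free"; else before="held"; fi

kill -9 "$holder"                   # simulate the script dying unexpectedly
wait "$holder" 2>/dev/null

if flock -n "$fd"; then after="free"; else after="held"; fi
echo "before kill: $before, after kill: $after"

rm -f "$lock" "${lock}.ready"
```

One caveat: the lock belongs to the open file, so a child process that inherited the descriptor and is still running keeps the lock held even after the parent script has died.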

In my sample code I added a sleep, some echo statements and I am using logger to output the echo to syslog.

Now I am going to submit a few copies of this job in the background.

$ ./myQueue.sh &

[1] 10665

$ ./myQueue.sh &

[2] 10673

$ ./myQueue.sh &

[3] 10676

$ ./myQueue.sh &

[4] 10679

$ ./myQueue.sh &

[6] 10685

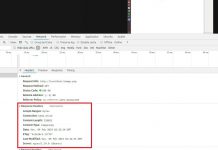

In the image above, you can clearly see that only one instance is active after the flock call, and that each subsequent job starts only after the active job finishes.
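If you would rather verify the queuing without reading syslog, the following sketch writes to a temporary file instead. The run_job helper, the temporary files, and the short sleep are all assumptions made for this illustration.

```shell
#!/bin/bash
# Sketch: three jobs queue on one lock; their start/end lines never interleave.
lock=$(mktemp)                      # throwaway lock file (assumption)
log=$(mktemp)                       # stands in for syslog in this sketch

run_job() {                         # hypothetical helper, $1 = job number
    local fd
    exec {fd}>"$lock"
    flock --timeout 300 "$fd" || return 1
    echo "start $1" >> "$log"
    sleep 0.2                       # pretend to use the exclusive resource
    echo "end $1" >> "$log"
    flock -u "$fd"
    exec {fd}>&-                    # close the descriptor
}

for n in 1 2 3; do
    run_job "$n" &
done
wait                                # all background jobs have finished

cat "$log"

# Every "start N" line must be followed directly by the matching "end N" line.
serialized=$(awk '
    NR % 2 == 1 { job = $2; if ($1 != "start") bad = 1 }
    NR % 2 == 0 { if ($1 != "end" || $2 != job) bad = 1 }
    END { if (bad || NR != 6) print "no"; else print "yes" }
' "$log")
echo "serialized: $serialized"

rm -f "$lock" "$log"
```

The jobs never overlap, but the order in which waiting jobs obtain the lock is not guaranteed to match the order in which they were submitted.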

Other cases

Say you wanted to modify this to do a traditional lock, where if you cannot get the lock, your script exits right away. Modify the flock as shown below to achieve this.

flock -n "$fd" || exit 1

The -n option makes it fail rather than wait if the lock cannot be immediately acquired.
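As a rough illustration of the non-blocking case, the sketch below measures how long the failed attempt takes. The temporary lock file and the names holder, outcome, and waited are assumptions for the example.

```shell
#!/bin/bash
# Sketch: with -n a second instance fails at once instead of queuing.
lock=$(mktemp)                      # throwaway lock file (assumption)

# Pretend to be the instance that is already running.
(
    exec {fd}>"$lock"
    flock "$fd"
    : > "${lock}.ready"
    exec sleep 5
) &
holder=$!
until [ -e "${lock}.ready" ]; do sleep 0.1; done

exec {fd}>"$lock"
start=$SECONDS
if flock -n "$fd"; then
    outcome="got the lock"
else
    outcome="already running"       # a real script would log this and exit 1
fi
waited=$(( SECONDS - start ))
echo "$outcome after ${waited}s"

kill "$holder" 2>/dev/null
wait "$holder" 2>/dev/null
rm -f "$lock" "${lock}.ready"
```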

Another possibility is that you only want certain areas of your script to have exclusive access. You can modify your code as shown below.

exec {fd}>"$lock"

flock --timeout 300 "$fd" || exit 1

# some task here that needs exclusive lock

flock -u "$fd"

# No more lock here

The -u option releases the previously acquired lock.
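To make the idea concrete, here is a sketch in which only a read-modify-write on a shared counter file is locked, while the rest of each worker runs in parallel. The bump function, the counter file, and the sleeps are hypothetical and exist only for this example.

```shell
#!/bin/bash
# Sketch: only the counter update is locked; the rest of each worker runs in parallel.
lock=$(mktemp)                      # throwaway lock file (assumption)
counter=$(mktemp)                   # shared resource for the demonstration
echo 0 > "$counter"

bump() {                            # hypothetical worker
    local fd n
    sleep 0.1                       # unlocked work, may overlap with other workers
    exec {fd}>"$lock"
    flock --timeout 300 "$fd" || return 1
    n=$(cat "$counter")             # read-modify-write needs exclusive access
    echo $(( n + 1 )) > "$counter"
    flock -u "$fd"                  # release the lock early
    sleep 0.1                       # more unlocked work
    exec {fd}>&-                    # close the descriptor
}

for i in 1 2 3 4 5; do
    bump &
done
wait

total=$(cat "$counter")
echo "total=$total"
rm -f "$lock" "$counter"
```

Without the lock around the update, two workers could read the same value and one increment would be lost.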

Conclusion

Using flock, I can queue up multiple instances of a shell script or terminate secondary copies. I am now actively using flock to control certain parts of my shell scripts.

Further reading

- https://stackoverflow.com/questions/8297415/in-bash-how-to-find-the-lowest-numbered-unused-file-descriptor

- https://linux.die.net/man/2/flock

Featured Image Photo Credit:

Shahadat Shemul