Background

When your service runs on an Auto Scaling group, it is very easy to add scheduled actions that scale the service at a given time. But what can you do if the service runs in containers on ECS container instances? Scaling the ECS container instances alone will not make any difference, because you also have to change the desired count for the ECS service.

In my case, I knew that the load on a particular service goes up at a predefined time every day, and I wanted to be proactive about having enough containers already running at that time. I did not want to wait for a service alarm to trigger a scaling event; I wanted it done before the load goes up.

There are a few ways to solve this problem:

- Run a cron job on a server that uses the AWS CLI to change the desired count for the ECS service.

- Run a scheduled ECS task that modifies the desired count.

- Configure a CloudWatch Events rule to trigger a Lambda function at a predefined time, which scales your ECS service.

The first option needs an EC2 server with a cron job, and you have to make sure this server is running at the time the job needs to run.

The second approach requires building a Docker container with some code, creating an ECS task definition and then scheduling it. It can be done, but it takes time.

In my opinion, the third option is the quickest, and it does not need any container, ECS task or EC2 instance.

Requirements

- I am using the Serverless Framework, so make sure you have it installed on your workstation.

- To keep my serverless.yml short and to the point, I am using a predefined IAM role.

Procedure

The first step is to set up our work area by running the commands shown below.

```shell
sls create -v --template aws-python3 --path ecs-svc
cd ecs-svc
```

Here you will see two files, serverless.yml and handler.py. We are going to update these files with our code as shown below.

serverless.yml

```yaml
service: ecssvc

provider:
  name: aws
  runtime: python3.7
  role: arn:aws:iam::XXXXXXXXX:role/serverless
  stage: prd

functions:
  Scale-ECS-Svc:
    handler: handler.scale_ecs_svc
    memorySize: 128
    events:
      - schedule:
          rate: cron(0 12 * * ? *)
          enabled: true
          input:
            ecs_cluster: ecs
            ecs_service: home-website
            max_count: 8
```

As mentioned above, I use a predefined role for my Lambda functions. Only sysadmins are allowed to create IAM policies in my AWS account. Keep reading to see a sample policy.

The serverless.yml defines the function. My script does not need much memory, and the framework's default is 1024 MB, so I set memorySize to 128 MB, the lowest value Lambda allows.
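The schedule in this serverless.yml, `cron(0 12 * * ? *)`, is a CloudWatch Events cron expression, and CloudWatch Events evaluates cron expressions in UTC. It therefore fires at 12:00 UTC every day regardless of your local time zone, which matters if your load peak is defined in local time. Below is a small standard-library sketch that computes the next firing time of a daily `cron(minute hour * * ? *)` schedule from a timezone-aware "now"; `next_daily_run` is a hypothetical helper for illustration, not part of the framework.

```python
from datetime import datetime, timedelta, timezone


def next_daily_run(now: datetime, hour: int = 12, minute: int = 0) -> datetime:
    """Next firing time (in UTC) of a daily cron(minute hour * * ? *) schedule.

    `now` must be timezone-aware. CloudWatch Events evaluates cron
    expressions in UTC, so the comparison is done in UTC.
    """
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    now_utc = now.astimezone(timezone.utc)
    candidate = now_utc.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now_utc:
        # Today's slot is not strictly in the future, so the next run is tomorrow.
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    # Example: 09:30 at UTC-5 is 14:30 UTC, already past today's 12:00 UTC slot.
    local_now = datetime(2020, 3, 2, 9, 30, tzinfo=timezone(timedelta(hours=-5)))
    print(next_daily_run(local_now))  # 2020-03-03 12:00:00+00:00
```

Working backwards the same way tells you which UTC hour to put in the cron expression for a local-time peak; keep in mind that a fixed UTC hour drifts by an hour against local time across daylight-saving changes.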

I want to keep my code generic enough to scale other ECS services too, so I pass the ECS cluster name, the service name and a maximum count to the function. If the service is already scaled up, I do not want the function to keep adding containers beyond that maximum.
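The max-count guard is the only real decision the function makes, so it can help to look at it in isolation. This sketch mirrors the rule used in the handler (add two tasks, and only if the result does not exceed max_count); `next_desired_count` is a hypothetical name for illustration.

```python
from typing import Optional


def next_desired_count(current: int, max_count: int, step: int = 2) -> Optional[int]:
    """Apply the handler's rule: add `step` tasks, but only if the result stays <= max_count.

    Returns the new desired count, or None when the service should be left alone.
    """
    proposed = current + step
    if proposed <= max_count:
        return proposed
    return None


if __name__ == "__main__":
    # With max_count=8, as in the serverless.yml input:
    print(next_desired_count(4, 8))  # 6
    print(next_desired_count(6, 8))  # 8
    print(next_desired_count(7, 8))  # None (9 would exceed the cap)
```

Note the last case: with 7 tasks running, this rule leaves the service alone rather than stopping at 8. If you would rather top up to the cap, one alternative is to use min(current + step, max_count) whenever that is greater than current; that is a change from the handler shown below, not what it does.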

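Below is a basic policy you could use. It grants far more permissions than the function needs, so I suggest you carefully craft a narrower policy for a production environment: the function itself only calls ecs:DescribeServices and ecs:UpdateService, plus the CloudWatch Logs actions it needs to write its logs.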

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecs:*",
        "ec2:*"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "*"
    }
  ]
}
```

The second file, handler.py, is where we will add the Python code for our Lambda function.

Note:

The code shown here is intended to demonstrate the procedure and lacks error handling. Please add exception handling as appropriate.

```python
# handler.py
import json

import boto3

# Create the ECS client once, outside the handler, so warm invocations reuse it.
ecs = boto3.client('ecs')


def scale_ecs_svc(event, context):
    # Parameters come from the "input" block of the schedule event
    # (or from a test event in the Lambda console).
    ecs_cluster = event['ecs_cluster']
    max_count = event['max_count']
    ecs_service = event['ecs_service']

    # Look up the service's current desired count.
    resp = ecs.describe_services(
        cluster=ecs_cluster,
        services=[ecs_service]
    )
    desired_count = resp['services'][0]['desiredCount']

    # Add two tasks, but only if the new count stays within max_count.
    desired_count += 2
    if desired_count <= max_count:
        ecs.update_service(
            cluster=ecs_cluster,
            service=ecs_service,
            desiredCount=desired_count
        )
        body = {
            "message": "Scaled up successfully!",
            "desired_count": desired_count
        }
        response = {
            "statusCode": 200,
            "body": json.dumps(body)
        }
    else:
        response = {
            "statusCode": 200,
            "body": "No scale done"
        }

    return response
```

As you can see, I am not checking for exceptions. You should handle the cases where the ECS cluster name, the service name or the count is invalid.
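As a starting point for that error handling, here is a sketch of two checks, assuming you want the function to fail with a clear message rather than with a bare Python exception from deep inside the handler. One detail worth knowing: when describe_services is given a service name it cannot find, it does not raise; it returns the service under "failures" with an empty "services" list, so resp['services'][0] in the handler would fail with an IndexError. A cluster that does not exist is reported differently, as a ClusterNotFoundException raised by boto3 (a botocore ClientError), so catch that separately. `parse_event`, `current_desired_count` and `FakeEcs` are hypothetical names; the ECS client is passed in so the sketch runs without AWS.

```python
from typing import Any, Dict, Tuple


def parse_event(event: Dict[str, Any]) -> Tuple[str, str, int]:
    """Extract (cluster, service, max_count) from the event, failing with a clear message."""
    missing = [k for k in ("ecs_cluster", "ecs_service", "max_count") if k not in event]
    if missing:
        raise ValueError("missing keys in event: " + ", ".join(missing))
    max_count = event["max_count"]
    # bool is a subclass of int, so exclude it explicitly.
    if isinstance(max_count, bool) or not isinstance(max_count, int) or max_count < 0:
        raise ValueError(f"max_count must be a non-negative integer, got {max_count!r}")
    return str(event["ecs_cluster"]), str(event["ecs_service"]), max_count


def current_desired_count(ecs, cluster: str, service: str) -> int:
    """Return the service's desiredCount, or raise LookupError if it is missing or not ACTIVE.

    `ecs` is a boto3 ECS client, or any object with a compatible describe_services().
    """
    resp = ecs.describe_services(cluster=cluster, services=[service])
    # An unknown service is reported in "failures" instead of raising an exception.
    if resp.get("failures") or not resp.get("services"):
        raise LookupError(
            f"service {service!r} not found in cluster {cluster!r}: {resp.get('failures')}")
    svc = resp["services"][0]
    if svc.get("status") != "ACTIVE":
        raise LookupError(f"service {service!r} is {svc.get('status')}, not ACTIVE")
    return svc["desiredCount"]


class FakeEcs:
    """Hypothetical stand-in for boto3.client('ecs') so the example runs offline."""

    def describe_services(self, cluster, services):
        if services == ["home-website"]:
            return {"services": [{"serviceName": "home-website", "status": "ACTIVE",
                                  "desiredCount": 4}], "failures": []}
        return {"services": [], "failures": [{"reason": "MISSING"}]}


if __name__ == "__main__":
    cluster, service, max_count = parse_event(
        {"ecs_cluster": "ecs", "ecs_service": "home-website", "max_count": 8})
    print(current_desired_count(FakeEcs(), cluster, service))  # 4
```

In the real handler you would call these before update_service, and catch ValueError, LookupError and ClientError to return a meaningful response instead of an unhandled error.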

Deploy

After editing the code, go ahead and deploy your function using the commands shown below

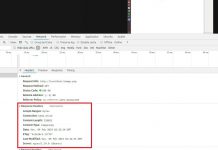

sls deployThe featured image in this post shows you sample output of the deployment done by the serverless framework. The deploy command converts your serverless.yml file into a CloudFormation template which builds your Lambda function and Cloudwatch Events rule.

You can now go to the CloudFormation console to see the stack and the resources it created, to the Lambda console to see your function, and to CloudWatch Events Rules to see the rule that was created.
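To see what the framework is wiring up for you, here is a rough sketch of creating the same kind of schedule by hand with boto3: a rule with a schedule expression, a permission that lets CloudWatch Events invoke the function, and a target carrying a constant JSON input (which is what the input block in serverless.yml corresponds to). This is an illustration under those assumptions, not what the framework literally runs; `schedule_lambda` is a hypothetical name, and the clients are passed in so it can be tried against stand-ins.

```python
import json
from typing import Any, Dict


def schedule_lambda(events, lambda_client, function_name: str, function_arn: str,
                    rule_name: str, schedule: str, payload: Dict[str, Any]) -> str:
    """Create a scheduled CloudWatch Events rule that invokes a Lambda function
    with a constant JSON input, and return the rule ARN.

    `events` and `lambda_client` are boto3 clients for "events" and "lambda".
    """
    # 1. The rule: a cron() or rate() schedule expression.
    rule_arn = events.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule,
        State="ENABLED",
    )["RuleArn"]

    # 2. Let CloudWatch Events invoke the function, but only from this rule.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )

    # 3. Target the function; "Input" is the constant event the function receives.
    resp = events.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "1", "Arn": function_arn, "Input": json.dumps(payload)}],
    )
    if resp.get("FailedEntryCount"):
        raise RuntimeError(f"put_targets failed: {resp.get('FailedEntries')}")
    return rule_arn
```

With real clients, a call for this post's setup might look like schedule_lambda(boto3.client('events'), boto3.client('lambda'), 'ecssvc-prd-Scale-ECS-Svc', function_arn, 'scale-home-website', 'cron(0 12 * * ? *)', {'ecs_cluster': 'ecs', 'ecs_service': 'home-website', 'max_count': 8}), where the function name assumes the framework's default service-stage-function naming and the rule name is made up. Running it a second time fails at add_permission because the statement ID already exists, which is one reason to let the framework and CloudFormation manage these resources.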

If you need help setting up other CloudWatch Events rules, see my other post here, which walks through adding a new CloudWatch Events rule step by step.

Testing

You can either wait for your cron schedule to invoke your function or run it yourself from the AWS Lambda console.

Configure a test event and set its data to something like this:

```json
{
  "ecs_cluster": "ecs",
  "ecs_service": "home-website",
  "max_count": 8
}
```

You should see a message in the console similar to the one shown in the image above.
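If you prefer to test from your workstation instead of the console, the same test event can be sent with boto3. A minimal sketch, assuming your AWS credentials and region are already configured; `invoke_scaler` is a hypothetical helper, and the client is passed in so it can be exercised without AWS.

```python
import json
from typing import Any, Dict


def invoke_scaler(lambda_client, function_name: str, event: Dict[str, Any]) -> Dict[str, Any]:
    """Invoke the deployed function synchronously and return the handler's decoded response.

    `lambda_client` is a boto3 "lambda" client, or any object with a compatible invoke().
    """
    resp = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps(event).encode("utf-8"),
    )
    # Payload is a stream; read it once and parse the JSON the handler returned.
    result = json.loads(resp["Payload"].read())
    if "FunctionError" in resp:
        # Unhandled exceptions inside the function are reported here rather than raised.
        raise RuntimeError(f"function error: {result}")
    return result
```

For this post's setup, invoke_scaler(boto3.client('lambda'), 'ecssvc-prd-Scale-ECS-Svc', {'ecs_cluster': 'ecs', 'ecs_service': 'home-website', 'max_count': 8}) would return the handler's response dictionary. Its body is itself a JSON string after a successful scale-up, so json.loads(result['body'])['desired_count'] gives the new count. The function name assumes the framework's default service-stage-function naming; check the Lambda console for the actual name.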