Amazon Elastic Container Service (ECS) lets you easily run and scale containerized applications on AWS. You can launch multiple containers in a matter of seconds, provided you have the EC2 resources available in your cluster. Its integration with other AWS services makes ECS a good fit for converting your applications to containers and running them on ECS clusters.

Background

I am in the process of converting all our services to run in containers, which are launched on ECS clusters backed by EC2 Spot instances. I run applications, scheduled tasks, websites, and tasks triggered by CloudWatch events, which launch a container to handle the event and exit. Any task that I think would run too long for a Lambda function, I run on ECS.

With all these containers running and exiting, it becomes difficult to keep track of when a task was run and whether it failed to run due to a resource issue. The AWS console allows you to look up ECS container state changes, but I wanted to have my own audit log of all container state changes.

I accomplished this by using CloudWatch events, Lambda and DynamoDB. I used the Serverless Framework to publish my function, and in this post I am going to show how you can set up a similar workflow.

This is similar to what I did for logging EC2 Instance state changes. See this post for details on how you could log state changes for EC2 servers.

Just like in my other post, I did not want to end up with hundreds of items in my DynamoDB table, so I also added a time to live for the items. This allows me to keep the size of my table small.

Requirements

- Make sure you have installed the Serverless Framework on your workstation.

- Make sure you have all the required IAM permissions to publish your function and create a DynamoDB table.

Procedure

To create the template for our work, I run the commands shown below.

    sls create -v --template aws-python3 --path ecslogger
    cd ecslogger

Here you will see two files, serverless.yml and handler.py. I am going to update these files with our code as shown below.

    service: ecslogger # NOTE: update this with your service name

    provider:
      name: aws
      runtime: python3.7
      environment:
        DYNAMODB_TABLE: ${self:service}
      stage: prd
      iamRoleStatements:
        - Effect: "Allow"
          Action:
            - dynamodb:Query
            - dynamodb:Scan
            - dynamodb:GetItem
            - dynamodb:PutItem
            - dynamodb:UpdateItem
          Resource: "arn:aws:dynamodb:${opt:region, self:provider.region}:*:table/${self:provider.environment.DYNAMODB_TABLE}"

    functions:
      ecslogger:
        handler: handler.ecslogger
        memorySize: 128
        timeout: 5
        events:
          - cloudwatchEvent:
              event:
                source:
                  - "aws.ecs"
                detail-type:
                  - "ECS Task State Change"
              enabled: true

    # you can add CloudFormation resource templates here
    resources:
      Resources:
        TodosDynamoDbTable:
          Type: 'AWS::DynamoDB::Table'
          Properties:
            AttributeDefinitions:
              -
                AttributeName: taskDefinitionArn
                AttributeType: S
              -
                AttributeName: time
                AttributeType: S
            KeySchema:
              -
                AttributeName: taskDefinitionArn
                KeyType: HASH
              -
                AttributeName: time
                KeyType: RANGE
            ProvisionedThroughput:
              ReadCapacityUnits: 1
              WriteCapacityUnits: 1
            TimeToLiveSpecification:
              AttributeName: ttl
              Enabled: 'TRUE'
            TableName: ${self:provider.environment.DYNAMODB_TABLE}

Let us do a quick review of our serverless.yml file.

I am setting an environment variable, DYNAMODB_TABLE, whose value is the service name. This value is used as the name of the DynamoDB table, so the table is called ecslogger.
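Since the table name is already exported as DYNAMODB_TABLE, the handler could read it from the environment instead of repeating the literal name. Here is a minimal sketch of that idea. The `resolve_settings` helper and its fallback values are hypothetical and not part of my deployed code; `AWS_REGION` is the variable the Lambda runtime sets to the function's region.

```python
import os


def resolve_settings(env=None):
    """Return (table_name, region) read from environment variables.

    Falls back to the values used in this post when a variable is missing,
    for example when the code runs outside Lambda.
    """
    env = os.environ if env is None else env
    table_name = env.get("DYNAMODB_TABLE", "ecslogger")
    region = env.get("AWS_REGION", "us-east-1")
    return table_name, region


if __name__ == "__main__":
    print(resolve_settings())
```

With something like this in place, renaming the service in serverless.yml would not require touching handler.py.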

iamRoleStatements is where I define what the Lambda function can do with the DynamoDB table that is going to be created.

The events section is where I define the CloudWatch Event. If you are only interested in some events, you can modify this section to trigger the rule only on them. I am interested in all ECS Task state changes.
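Narrowing the rule itself is done in the event pattern, which can also match on fields under `detail`. If you would rather keep the rule broad and decide in code, a small check inside the handler is another option. The sketch below is only an illustration: `should_log` is a hypothetical helper, and the default of logging only STOPPED tasks is my assumption about what you might want to keep.

```python
def should_log(event, statuses=("STOPPED",)):
    """Return True when the task's lastStatus is one we want to record."""
    detail = event.get("detail", {})
    return detail.get("lastStatus") in statuses


if __name__ == "__main__":
    print(should_log({"detail": {"lastStatus": "STOPPED"}}))
    print(should_log({"detail": {"lastStatus": "RUNNING"}}))
```

Filtering in the handler still invokes the function for every state change, so filtering in the rule is usually the cheaper choice when you know up front which events you need.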

In the resources section, I am setting up my DynamoDB table. My partition key is taskDefinitionArn and sort key is time. Time To Live is ttl and it is enabled.

Edit the handler.py file as shown below.

    import json
    import time

    import boto3

    dynamodb = boto3.resource('dynamodb', region_name='us-east-1')
    table = dynamodb.Table('ecslogger')

    # Retention period for logged items: 14 days, in seconds
    days = 14*60*60*24

    def ecslogger(event, context):
        # Expiry time (epoch seconds) stored in the ttl attribute
        ttl = int(time.time()) + days

        # Only log events that carry the partition key
        if 'taskDefinitionArn' in event['detail']:
            item = {
                'taskDefinitionArn': event['detail']['taskDefinitionArn'],
                'time': event['time'],
                'ttl': ttl
            }

            # Optional fields are copied only when present in the event
            if 'startedBy' in event['detail']:
                item['startedBy'] = event['detail']['startedBy']
            if 'desiredStatus' in event['detail']:
                item['desiredStatus'] = event['detail']['desiredStatus']
            if 'lastStatus' in event['detail']:
                item['lastStatus'] = event['detail']['lastStatus']
            if 'stoppedReason' in event['detail']:
                item['stoppedReason'] = event['detail']['stoppedReason']

            table.put_item(
                Item=item
            )

        body = {
            "message": "logged successfully!",
            "input": event
        }

        response = {
            "statusCode": 200,
            "body": json.dumps(body)
        }

        return response

I am setting Time To Live to 14 days, and I only store some of the data passed to the Lambda function. You can add other fields from the event that make sense to you.
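The chain of `if` checks in the handler can also be written as a small pure function, which makes it easier to add fields and to try the logic locally without touching DynamoDB. This is a sketch, not the code I deployed: `build_item`, `OPTIONAL_FIELDS` and the sample event are made up for illustration, and the sample contains only the fields the handler reads.

```python
import time

RETENTION_SECONDS = 14 * 24 * 60 * 60  # 14 days, as in the handler
OPTIONAL_FIELDS = ("startedBy", "desiredStatus", "lastStatus", "stoppedReason")


def build_item(event, now=None):
    """Build the DynamoDB item for one ECS task state change event.

    Returns None when the event has no taskDefinitionArn (the partition key).
    """
    detail = event.get("detail", {})
    if "taskDefinitionArn" not in detail:
        return None
    now = int(time.time()) if now is None else int(now)
    item = {
        "taskDefinitionArn": detail["taskDefinitionArn"],
        "time": event["time"],
        "ttl": now + RETENTION_SECONDS,  # epoch seconds, as DynamoDB TTL expects
    }
    # Copy only the optional fields that are present in this event.
    for field in OPTIONAL_FIELDS:
        if field in detail:
            item[field] = detail[field]
    return item


# Made-up sample event with only the fields the handler reads.
sample_event = {
    "time": "2019-01-01T00:00:00Z",
    "detail": {
        "taskDefinitionArn": "arn:aws:ecs:us-east-1:123456789012:task-definition/example:1",
        "lastStatus": "STOPPED",
        "stoppedReason": "Essential container in task exited",
    },
}

if __name__ == "__main__":
    print(build_item(sample_event, now=0))
```

To store another field from the event, you would add its name to `OPTIONAL_FIELDS`.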

The code above is simple and lacks error/exception handling. Please add appropriate exception handling to suit your needs. Modify the region to make sure it matches where you do your work.
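As one possible starting point for that exception handling, the write can be wrapped so that a failure is logged instead of going unnoticed. This is a minimal sketch under my own assumptions: `put_item_safely` is a hypothetical helper, and it catches `Exception` broadly to stay free of extra imports. In real code you would more likely catch `botocore.exceptions.ClientError`, which is what boto3 raises for service errors.

```python
import logging

logger = logging.getLogger(__name__)


def put_item_safely(table, item):
    """Write one item; return True on success and False if the write raised.

    `table` is anything with a put_item(Item=...) method, such as a boto3
    DynamoDB Table resource.
    """
    try:
        table.put_item(Item=item)
        return True
    except Exception:
        # boto3 reports service errors as botocore.exceptions.ClientError;
        # catching Exception here keeps the sketch free of extra imports.
        logger.exception(
            "Failed to log state change for %s", item.get("taskDefinitionArn")
        )
        return False
```

Whether to swallow the error like this or re-raise it is a design choice. Returning False keeps the invocation from failing, while re-raising marks it as failed, so the failure shows up in the function's error metrics.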

Deploy

After editing the code, go ahead and deploy your function using the command shown below.

    sls deploy

This will trigger the process of creating the CloudFormation template, which in turn will create a Lambda function, an IAM role for your function, and a DynamoDB table with Time to Live enabled.

After the resource creation completes, go to CloudFormation to see the stack information and what was created, to Lambda to see your function, to CloudWatch Event Rules to see the rule that was created, and finally to DynamoDB to see the table.

Testing

If you have an ECS service that you can scale, go ahead and make a change to it. This will trigger the CloudWatch Event, which in turn will run the Lambda function to store the state change in DynamoDB. Go to your DynamoDB console and query the table ecslogger to see the logged events.

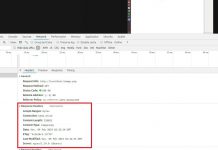

Here is a screenshot of data from my DynamoDB table.
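If you prefer to check the table from code rather than the console, a query on the partition key returns the logged events for one task definition. The function below is a sketch and not something from my setup: `recent_events` is a hypothetical helper, and it expects a boto3 DynamoDB Table resource (or anything with a compatible `query` method) to be passed in.

```python
def recent_events(table, task_definition_arn, limit=20):
    """Return the newest logged state changes for one task definition."""
    response = table.query(
        KeyConditionExpression="taskDefinitionArn = :arn",
        ExpressionAttributeValues={":arn": task_definition_arn},
        ScanIndexForward=False,  # sort key descending, so newest `time` first
        Limit=limit,
    )
    return response.get("Items", [])
```

You could call it with the same `table` object that handler.py creates. Because the sort key `time` is an ISO 8601 string, descending order on it gives the most recent events first.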

If you no longer wish to keep this function, you can run

    sls remove

This will remove all the resources that were created earlier.

Further Reading:

- Serverless Docs

- DynamoDB Time To Live

- CloudWatch Events

- AWS Lambda

- AWS Lambda Best Practices

- AWS IAM

- AWS ECS