I usually use Linux utilities like grep and awk to analyze requests coming to my blog server. These utilities provide a good view into your traffic logs, but still it is not easy to aggregate results or analyze the logs with more depth.

I wanted the ability to query my log data using SQL like queries, but not have to pay for a database. Amazon Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL.

Athena is serverless, so there is no need to have a dedicated database and no infrastructure to manage. It provides a cost effective way to run queries against data in S3 buckets.

In this post I am going to demonstrate how easy it is to setup a workflow to copy web server log files to S3 and use Athen to query data using SQL queries.

Procedure

We have three main tasks to complete that will allow us to run SQL like queries against Apache log files.

- Set a meaningful Apache Log Format and collect logs

- Copy Logs from WordPress (or any other Apache) server to AWS S3 bucket

- Setup Athena to query from S3 bucket

Apache Log Format

On a server packaged by Bitnami, the Apache httpd.conf file is at /opt/bitnami/apache2/conf/httpd.conf. Open this file in your favorite editor and search for the string LogFormat.

I am using a custom Log Format as I want to log the Client IP address being forwarded by Cloudflare, the hostname and the SSL Protocol of the incoming request.

The Hostname field helps in case you are running multiple Hosts on your server. In my case I host skbali.com and blog.skbali.com on the same instance.

Let us take a look at the Apache LogFormat I have defined in my httpd.conf file.

<IfModule log_config_module>

#

# The following directives define some format nicknames for use with

# a CustomLog directive (see below).

#

LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined

LogFormat "%h %l %u %t \"%r\" %>s %b" common

LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\" %{CF-Connecting-IP}i \"%{Host}i\" \"%{SSL_PROTOCOL}x\"" myCustom

CustomLog "logs/access_log" myCustom

</IfModule>The new LogFormat added by me is called myCustom. As you can see I added three new fields to the existing Log Format.

After making edits, remember to restart your Apache instance.

/opt/bitnami/ctlscript.sh restart apacheThe new logFormat should be visible in your access_log file. Here is a sample from my access_log

172.xx.xx.xx - - [31/Jan/2021:21:47:08 +0000] "HEAD / HTTP/1.1" 200 - "https://blog.skbali.com" "Mozilla/5.0+(compatible; UptimeRobot/2.0; http://www.uptimerobot.com/)" 63.xx.xx.xx "blog.skbali.com" "TLSv1.3"

Copy Logs To S3 Bucket

If you have an existing S3 bucket, you can use that or you can create a new bucket using the console or follow my post to create an S3 bucket using Terraform.

You will need AWS CLI installed on your instance and create a named profile.

It is best to create a profile for a new user with the least privileges. In my case I created a user called blog and added no permissions to it.

The policy to give access to your bucket is added in the S3 bucket. Here is a template of the policy I used. Edit for your bucket name and AWS account number.

Keep in mind S3 bucket names are globally unique and the name listed here is an example and does not belong to me.

{

"Version": "2008-10-17",

"Id": "Policy1357935677554",

"Statement": [

{

"Sid": "Stmt1357935647218",

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::123456789012:user/blog"

},

"Action": "s3:ListBucket",

"Resource": "arn:aws:s3:::my-blog-logs"

},

{

"Sid": "Stmt1357935676138",

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::123456789012:user/blog"

},

"Action": [

"s3:GetObject",

"s3:PutObject"

],

"Resource": "arn:aws:s3:::my-blog-logs/*"

}

]

}The next step is to write a shell script that will copy your Apache access log files to S3 bucket. On your server run the following commands.

mkdir ~/bin ~/apache-logs

touch ~/bin/s3backup.sh

chmod u+x ~/bin/s3backup.shUsing your favorite editor, edit the file ~/bin/s3backup.sh and paste the contents as shown

#!/bin/bash -x

rsync -av --include "access*" --exclude "*" /opt/bitnami/apache2/logs/ /home/bitnami/apache-logs

aws s3 sync /home/bitnami/apache-logs s3://sbali-blog-logs/apache-logs --profile blogThis script uses rsync to make a local copy of your logs and upload the logs to S3. If you do not want to have a local copy of the logs, you can remove the rsync and edit the aws s3 sync command to directly copy files from the logs folder.

Note: Pay attention to the named profile you create and use in script. Mine is called blog and referenced as –profile blog

$ aws sts get-caller-identity --profile blog

{

"UserId": "XXXXXXXXXXXXXXXX",

"Account": "123456789012",

"Arn": "arn:aws:iam::123456789012:user/blog"

}

If you need help setting up a named profile, leave me a comment below and I will share detailed steps.

Next step is to edit the cron and set a schedule for our log transfer. Run the command crontab -e to open up your cron schedule and add the line as shown and save it.

15 * * * * [ -x /home/bitnami/bin/s3backup.sh ] && /home/bitnami/bin/s3backup.sh > /tmp/s3backup.log 2>&1The above schedule will run your script to copy log files to S3 bucket, 15 mins past the hour, every hour.

To ensure you do not have any errors, you should run your job at least once from the command line as I have done.

$ ~/bin/s3backup.sh

+ rsync -av --include 'access*' --exclude '*' /opt/bitnami/apache2/logs/ /home/bitnami/apache-logs

sending incremental file list

access_log

sent 657,918 bytes received 35 bytes 1,315,906.00 bytes/sec

total size is 657,646 speedup is 1.00

+ aws s3 sync /home/bitnami/apache-logs s3://my-blog-logs/apache-logs --profile blog

upload: apache-logs/access_log to s3://my-blog-logs/apache-logs/access_log

Athena Setup

The first step to using Athena is to setup a table corresponding to the logs files copied to S3. This can be done from the AWS Athena console using a wizard or by running a query.

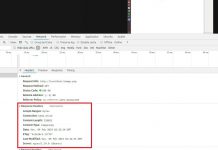

I created my table by running the query shown below. Copy the query to the ‘New query 1’ window and click on the button ‘Run query’.

CREATE EXTERNAL TABLE IF NOT EXISTS default.blog_access_logs (

`ip` string,

`field_l` string,

`field_u` string,

`time` string,

`request_url` string,

`status` string,

`size` string,

`referer` string,

`user_agent` string,

`cf_connecting_ip` string,

`domain_name` string,

`ssl_protocol` string

)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.RegexSerDe'

WITH SERDEPROPERTIES (

'serialization.format' = '1',

'input.regex' = '([^ ]*) ([^ ]*) ([^ ]*) (?:-|\\[([^\\]]*)\\]) ([^ \\\"]*|\\\"[^\\\"]*\\\") (-|[0-9]*) ([^ ]*) \\\"([^ ]*)\\\" \\\"([^\\\"]*)\\\" ([^ ]*) \"([^ ]*)\\\" \\\"([^ ]*)\\\"'

) LOCATION 's3://my-blog-logs/apache-logs/';The above table definition corresponds to the Log Format being used by me. In your case, if you do not have Cloudflare, you can edit out the cf_connecting_ip field and the corresponding regex as well.

After the query is run, your table should be visible on the left pane. At this point you should be able to run queries to analyze your web traffic. An example is shown here

Here are some simple query examples to see your server traffic.

-- Count Request by Cloudflare client ip

SELECT cf_connecting_ip,

count(*)

FROM blog_access_logs

WHERE cf_connecting_ip != '-'

GROUP BY cf_connecting_ip

ORDER BY 2 DESC ;

-- See all user agents reaching your server

SELECT DISTINCT user_agent

FROM blog_access_logs

-- Request count by SSL Protocol

SELECT ssl_protocol,

count(*)

FROM blog_access_logs

GROUP BY ssl_protocol

ORDER BY 2 DESC ;

-- All requests from one client ip

SELECT *

FROM blog_access_logs

WHERE cf_connecting_ip = '1.1.1.1' ;

-- By date

SELECT *

FROM "default"."blog_access_logs"

WHERE time LIKE '02/Feb/2021%' limit 100;

Athena can handle complex queries as well, so do try out queries that make more sense to you.

Note: There is a cost associated with uploading files to S3, keeping files in S3 and running Athena queries. You are responsible for all charges incurred in your account. See Athena Pricing.

Hope this post was helpful. Let me know if you have any questions or need help with this by commenting below.

Further Reading

Photo Credit

Photo by Cookie the Pom on Unsplash